Tackling ‘Netflix Problem,’ WID Researcher Snags Lagrange Prize

How does mathematics research affect approaches to everyday problems? What is the “Netflix problem” and what does it have to do with math?

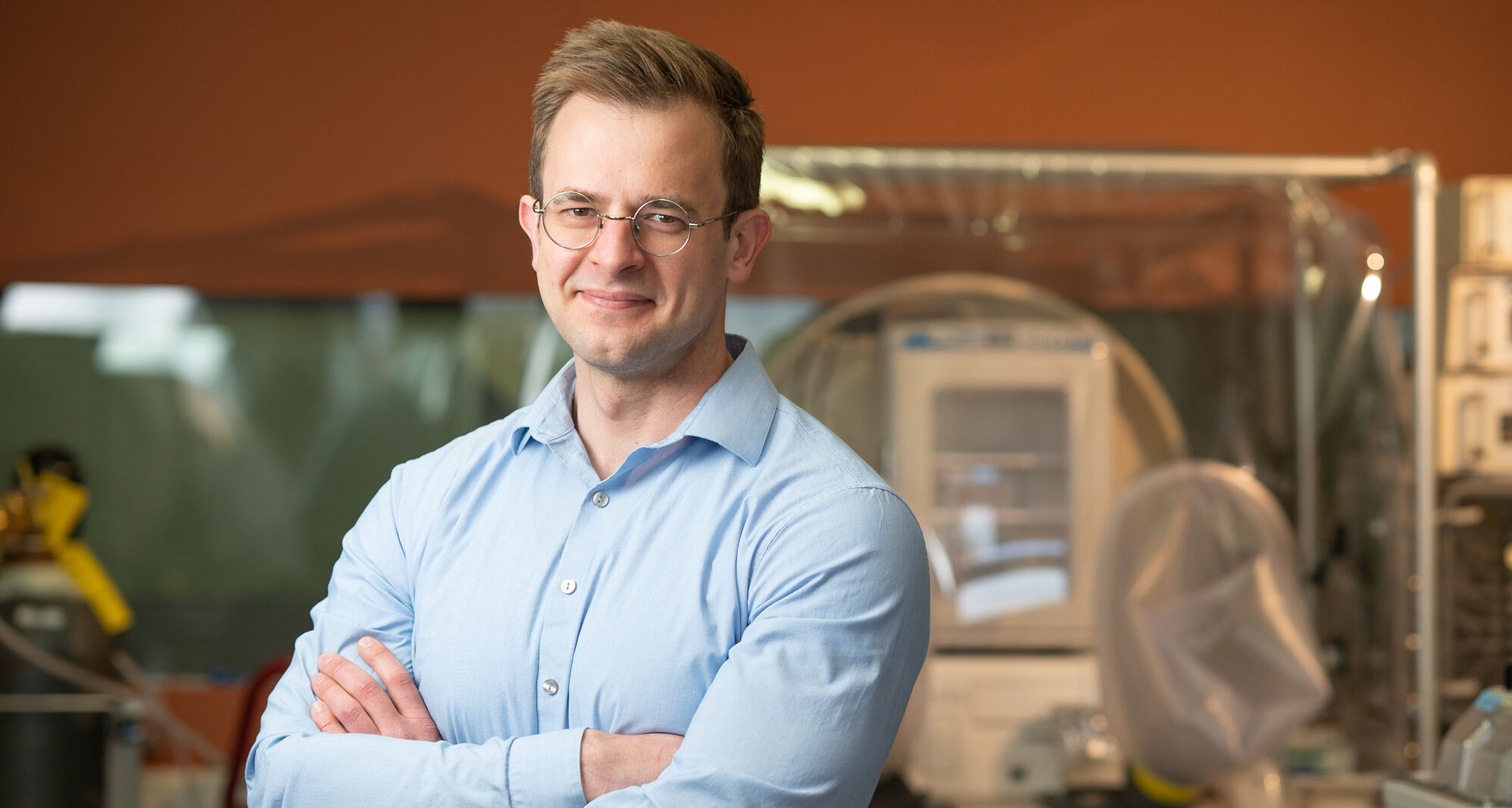

To find out, WID communications sat down with Ben Recht, assistant professor of computer sciences and researcher in the institute’s Optimization group, who recently received the Lagrange Prize in Continuous Optimization for studying the math behind making predictions from incomplete data.

Question: In addition to your other work, what got you started in this line of research?

Recht: In 2006, Netflix announced a prize for $1 million for anyone who could improve its movie recommendation engine. Others and myself started playing around with their datasets to see if they could make improvements.

At the same time, it was the heyday of this new idea called “compressed sensing,” which revisited the foundation of how we acquire data. Take a video, for example. The raw data in a video is huge since you get every pixel for every frame. So, for instance, we store videos using compression, allowing them to be streamed over the airways of the Internet. With compressed sensing, you can combine compression and acquisition, which allows you to acquire medical images faster, make better radar systems or even take pictures with single-pixel cameras. This new model of sensing opened up new direction in engineering and provided a slew of very interesting applied math problems.

So academic discussions in addition to the Netflix problem influenced your work on mathematical compressed sensing?

What we realized was that we could take these tools that were being used in compressed sensing and apply them to completely different problems — like this problem given by Netflix. The problem looks like this: I have a big database, and almost all of my data is missing. That problem, if you just looked at it, was very related to compressed sensing, so we joined the two.

What did you explore in your research, which eventually earned you the Lagrange Prize?

We were able to show that as long as the number of entries was sufficiently large — the number of things you knew about a person was big enough — then you could fill the whole matrix in without seeing any more entries. To give you a feel for that, even if I had a database with a million users and 10 million products, as long as I saw enough products per person, then I could actually predict with very high accuracy what they would be interested in purchasing. For all practical problems that involve people, that number seemed to be between 25 and 100. It’s relying on the assumption that people aren’t unique, even though we like to think we’re all unique and sporadic. Psychologists have found that the number of factors that influence our behavior are fewer than we think. That’s the only assumption we make.

Would you say that you solved the “Netflix problem”?

No. What’s interesting about our solution is that we first confirmed that it was truly possible to fill in the entries if you had a computer that could solve this particular problem. And we provided an algorithm that would achieve the type of predictions we could make. If you look at that algorithm, it turned out to be the exact one that the first person who published on the Netflix prize had.

What have you learned from this prize-winning research?

One of my favorite things about this paper is that the algorithm used to solve the problem was the common sense one. It’s exactly what many talented engineers had proposed as a heuristic, or the most intuitive solution. That’s what I think is the most interesting about the work that I do: showing that simple algorithms and a lot of data are the best solution nine times out of 10.

What does receiving the Lagrange Prize mean to you?

This prize is given every three years for outstanding achievements in optimization from the past six years. I’m completely flattered. The committee is filled with some of the guys who defined the subject, and the fact that they think that this is the best paper in continuous optimization is awesome.

–Interview conducted by Marianne English